Online meetings continue to grow in popularity, with a CAGR of nearly 8% through 2032, but they are also a growing source of fraud. Yes, meeting online enables working from home and cuts work travel expenses. However, online meetings are no longer as safe as they used to be.

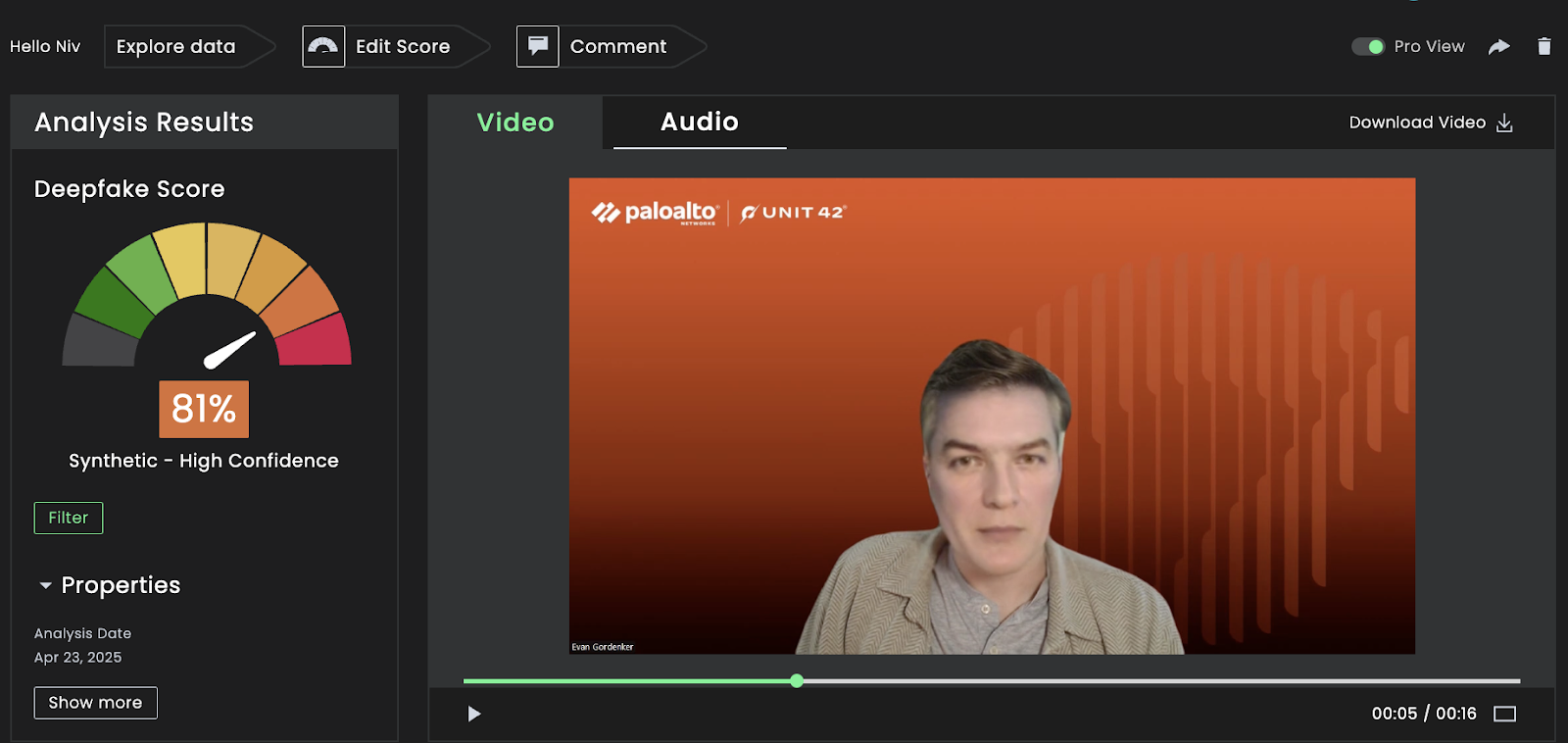

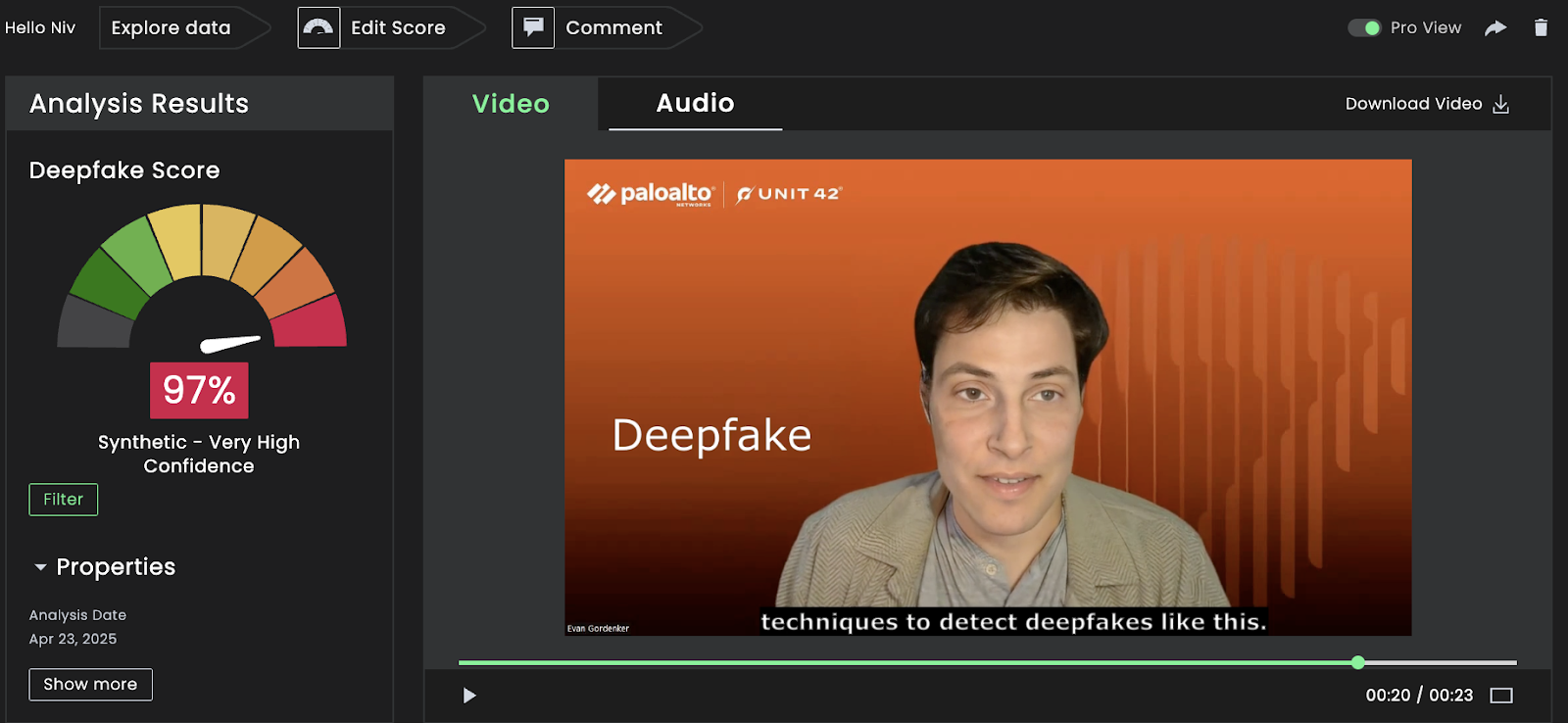

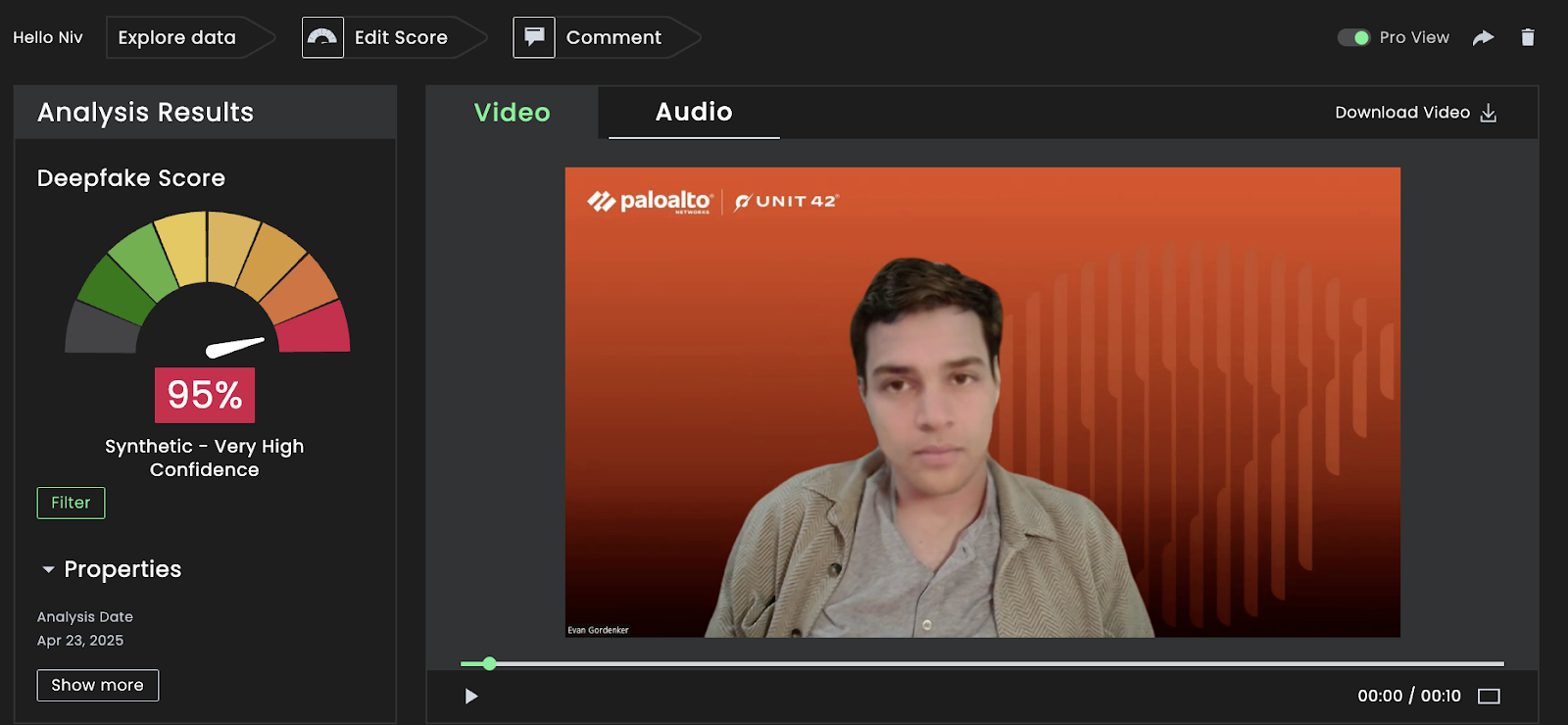

The rise of sophisticated technologies like deepfakes means malevolent actors can exploit online meetings to commit fraud by convincingly impersonating a real person.

Cisco Webex is an online meeting platform, and Cisco has built a comprehensive security architecture into it to combat fraud and protect users.

Graded Security for Meeting Types

Webex offers different meeting types and layers on security controls according to the type the user selects. Standard meetings provide a baseline level of security with encryption for signaling and media within the Webex cloud.

For enhanced privacy, private meetings allow organizations to keep all media traffic on their premises, preventing it from cascading to the Webex cloud.

You can also apply end-to-end encryption to meetings for the highest level of security, ensuring that only participants have access to the meeting's content encryption keys.

Administrators manage all of this in the Webex Control Hub, where they can assign appropriate meeting types based on the sensitivity of the information being discussed. Host controls allow for the management of meeting participants, including admitting users from the lobby and verifying user identity information.
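As a rough illustration of matching meeting type to sensitivity, the Python sketch below maps sensitivity labels to the three meeting types described above. The labels, the `POLICY` mapping, and `pick_meeting_type` are hypothetical names chosen for this example; this is not Control Hub configuration or a Webex API.

```python
from enum import Enum


class MeetingType(Enum):
    STANDARD = "standard"          # baseline encryption within the Webex cloud
    PRIVATE = "private"            # media kept on the organization's premises
    END_TO_END_ENCRYPTED = "e2ee"  # only participants hold the content keys


# Hypothetical sensitivity labels; an organization would define its own.
POLICY = {
    "public": MeetingType.STANDARD,
    "internal": MeetingType.PRIVATE,
    "confidential": MeetingType.END_TO_END_ENCRYPTED,
}


def pick_meeting_type(sensitivity: str) -> MeetingType:
    """Return the meeting type for a sensitivity label.

    Unknown labels fall back to the strictest type, so a typo never
    silently downgrades protection.
    """
    return POLICY.get(sensitivity.lower(), MeetingType.END_TO_END_ENCRYPTED)
```

Falling back to the strictest type for unrecognized labels is a design choice of this sketch: it trades some convenience for a fail-closed default.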

Zero Trust

Zero Trust is a security framework that assumes no user or device is trusted by default, regardless of their location or network. Webex Meetings implements Zero Trust through End-to-End Encryption (E2EE) and End-to-End Identity (E2EI).

E2EE ensures that only meeting participants have access to the meeting encryption keys, preventing even Cisco from decrypting the meeting content. This approach enhances privacy and confidentiality, as the Webex cloud cannot access the meeting data.

E2EI verifies the identity of each participant through verifiable credentials and certificates issued by independent identity providers. To ensure secure access to Webex services, users download and install the Webex App, which establishes a secure TLS connection with the Webex Cloud.

The Webex Identity Service then prompts the user for their email ID, authenticating them either through the Webex Identity Service or their Enterprise Identity Provider (IdP) using Single Sign-On (SSO).

Upon successful authentication, OAuth access and refresh tokens are generated and sent to the Webex App. This prevents impersonation attempts and ensures that only authorized individuals can join the meeting.

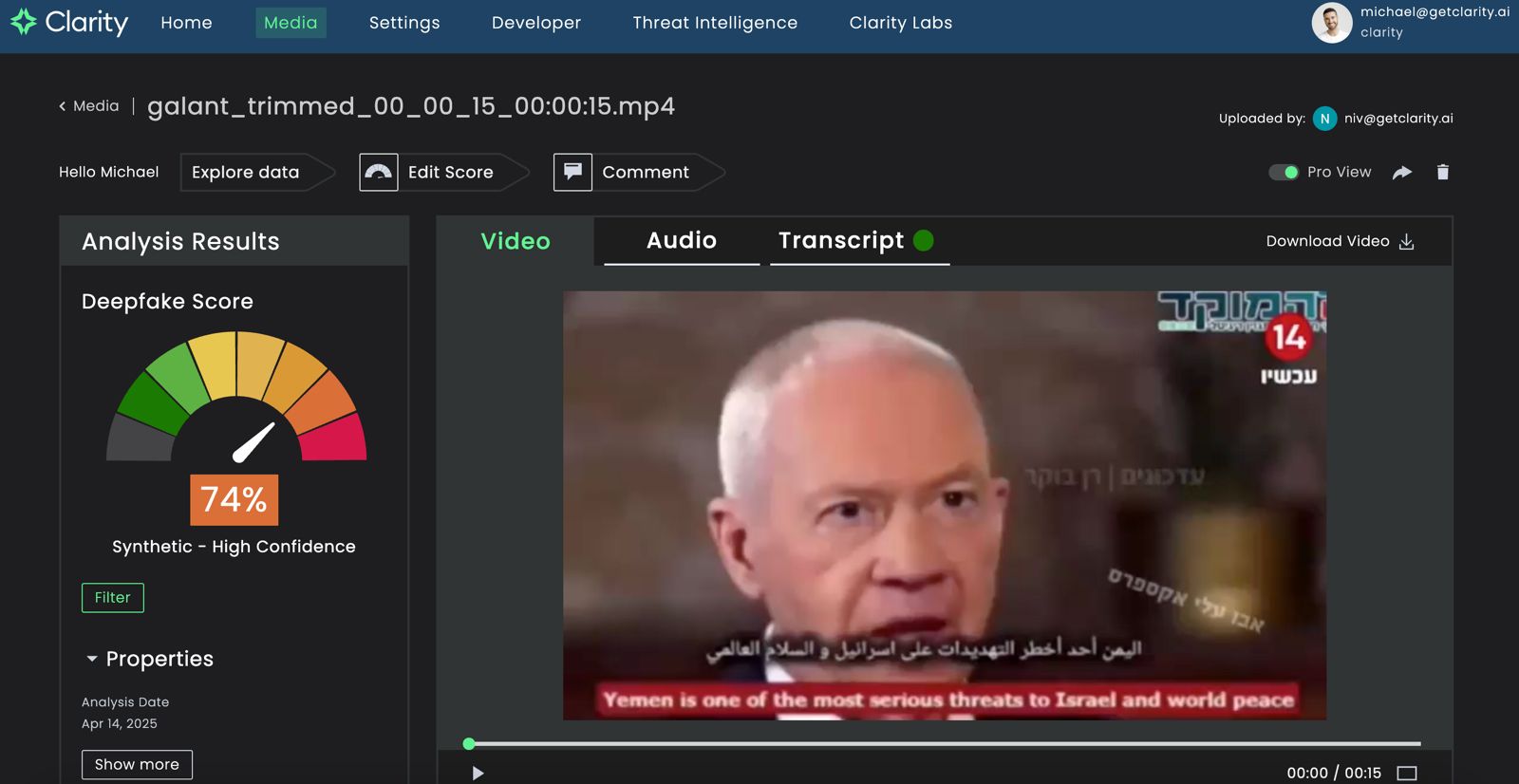
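The role of the access and refresh tokens can be sketched in a few lines of Python. This is a generic OAuth-style pattern, not the Webex App's actual implementation: `TokenPair`, `current_access_token`, `fake_refresh`, and the 30-second skew are all assumptions made for illustration.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass(frozen=True)
class TokenPair:
    access_token: str
    refresh_token: str
    expires_at: float  # Unix time at which the access token expires


def current_access_token(
    tokens: TokenPair,
    refresh: Callable[[str], TokenPair],
    now: Optional[float] = None,
    skew_seconds: float = 30.0,
) -> Tuple[str, TokenPair]:
    """Return a usable access token, refreshing it if it is about to expire."""
    now = time.time() if now is None else now
    if now >= tokens.expires_at - skew_seconds:
        # Exchange the refresh token for a new pair instead of
        # prompting the user to authenticate again.
        tokens = refresh(tokens.refresh_token)
    return tokens.access_token, tokens


def fake_refresh(refresh_token: str) -> TokenPair:
    # Stand-in for a real call to an identity service's token endpoint.
    return TokenPair("new-access", refresh_token, time.time() + 3600)
```

A caller would invoke `current_access_token(tokens, fake_refresh)` before each request and keep the returned pair, so a short-lived access token limits the damage if it leaks while the refresh token spares the user repeated logins.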

Protecting Against Deepfakes

With deepfakes becoming such a big concern, Webex now equips hosts with tools to check the validity of a user's identity and vet individuals before admitting them to the meeting.

Hosts can view the names and email addresses of those in the lobby, and even see if they are internal to their organization or external guests. This allows them to screen participants and prevent unwanted attendees from joining. Verified users have a checkmark next to their name, while unverified users are clearly labeled.
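The screening decision a host makes in the lobby can be pictured with a small Python sketch. It is only a model of the idea: `LobbyEntry`, `is_internal`, and `screen_lobby` are hypothetical names, and treating a matching email domain as "internal" is a simplifying assumption rather than how Webex determines organization membership.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class LobbyEntry:
    name: str
    email: str
    verified: bool  # True when the identity is verified (the checkmark case)


def is_internal(entry: LobbyEntry, org_domain: str) -> bool:
    # Assumption: "internal" is approximated by matching the email domain.
    domain = entry.email.rsplit("@", 1)[-1].lower()
    return domain == org_domain.lower()


def screen_lobby(
    lobby: List[LobbyEntry], org_domain: str
) -> Tuple[List[LobbyEntry], List[LobbyEntry]]:
    """Split the lobby into entries to admit and entries needing manual review."""
    admit, review = [], []
    for entry in lobby:
        if entry.verified and is_internal(entry, org_domain):
            admit.append(entry)
        else:
            # External or unverified users are left for the host to vet.
            review.append(entry)
    return admit, review
```

For example, with `org_domain="example.com"`, a verified `ana@example.com` lands in the admit list, while an unverified internal user or any external guest is held for review.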

What’s more, meeting security codes in Webex protect against Man-in-the-Middle (MITM) attacks by displaying a code derived from all participants' MLS key packages to everyone in the meeting.

If the displayed codes match for all participants, it indicates that no attacker has intercepted or impersonated anyone in the meeting. It assures participants that they agree on all aspects of the group, including its secrets and the current participant list.
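To show why matching codes are meaningful, here is a minimal Python sketch of deriving a short code from every participant's key material. It is not the derivation Webex or the MLS protocol uses; the hashing scheme, the `security_code` function, and the six-digit format are assumptions chosen to illustrate the principle.

```python
import hashlib
from typing import List


def security_code(key_packages: List[bytes], digits: int = 6) -> str:
    """Derive a short numeric code from all participants' key packages.

    Sorting makes the result independent of the order in which each
    client learned about the others; length prefixes keep package
    boundaries unambiguous.
    """
    h = hashlib.sha256()
    for package in sorted(key_packages):
        h.update(len(package).to_bytes(4, "big"))
        h.update(package)
    value = int.from_bytes(h.digest(), "big") % 10**digits
    return f"{value:0{digits}d}"


# Placeholder key material for two participants.
alice_view = security_code([b"alice-key", b"bob-key"])
bob_view = security_code([b"bob-key", b"alice-key"])

# If an attacker swaps in their own key for Bob's, Alice's client derives
# its code from different inputs than Bob's does.
tampered_view = security_code([b"alice-key", b"attacker-key"])
```

Because every client hashes the same set of keys, honest participants see the same code. A substituted key changes the input to the hash, so with overwhelming probability the codes no longer match when participants compare them.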

Beyond deepfakes, Webex addresses toll fraud and eavesdropping. It allows administrators to disable the callback feature to certain countries, mitigating the risk of toll fraud from high-risk regions. It also enables audio watermarking, allowing organizations to trace the source of any unauthorized recordings and deter eavesdropping.
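The callback restriction amounts to a block list checked before a call is placed. The Python sketch below assumes numbers arrive in international format; `BLOCKED_CALLBACK_PREFIXES` and `callback_allowed` are hypothetical, and the prefixes are placeholders rather than a recommendation or a Webex setting.

```python
from typing import Iterable

# Placeholder calling-code prefixes; an administrator would choose their own.
BLOCKED_CALLBACK_PREFIXES = {"+881", "+882"}


def callback_allowed(number: str, blocked_prefixes: Iterable[str]) -> bool:
    """Return False if the callback number starts with a blocked prefix."""
    # Normalize "+881 555 0100" or "+881-555-0100" to "+8815550100".
    normalized = "+" + "".join(ch for ch in number if ch.isdigit())
    return not any(normalized.startswith(prefix) for prefix in blocked_prefixes)
```

Checking the number at request time, before any call is placed, is what prevents charges from being incurred in the first place.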

Latest Webex Security Features

Cisco continuously updates Webex with new security features to stay ahead of evolving threats. One new feature is Auto Admit, which allows authenticated, invited users to join or start meetings without waiting for the host, streamlining the meeting process while maintaining security.

Additional lobby controls for Personal Rooms provide more granular control over access, reducing lobby bloat and the risk of meeting fraud. External and internal meeting access controls enable administrators to restrict participation based on user domains or Webex sites, further enhancing security.

Feature controls for both external and internal Webex meetings allow administrators to disable or restrict specific functionalities, such as recording or screen sharing, to prevent unauthorized access or leakage of sensitive information.
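Domain-based access controls and feature controls can be modeled as a simple policy check. The Python sketch below is a simplified illustration under stated assumptions: `MeetingPolicy`, `may_join`, and `feature_enabled` are hypothetical names, participants are classified by email domain, and domains in the policy are assumed to be stored in lowercase. It does not reflect the actual Control Hub settings.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class MeetingPolicy:
    org_domain: str                            # lowercase, e.g. "example.com"
    allowed_external_domains: FrozenSet[str]   # lowercase partner domains
    features_disabled_for_external: FrozenSet[str]


def _domain(email: str) -> str:
    return email.rsplit("@", 1)[-1].lower()


def may_join(email: str, policy: MeetingPolicy) -> bool:
    """Allow internal users and users from explicitly allowed domains."""
    domain = _domain(email)
    return domain == policy.org_domain or domain in policy.allowed_external_domains


def feature_enabled(feature: str, email: str, policy: MeetingPolicy) -> bool:
    """Internal users keep every feature; external users lose the disabled ones."""
    if _domain(email) == policy.org_domain:
        return True
    return feature not in policy.features_disabled_for_external


policy = MeetingPolicy(
    org_domain="example.com",
    allowed_external_domains=frozenset({"partner.org"}),
    features_disabled_for_external=frozenset({"recording", "screen_sharing"}),
)
```

With this policy, a guest from `partner.org` may join but cannot record, while a user from an unlisted domain is refused entry altogether.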

Security Roadmap

Webex plans to expand its End-to-End Encryption (E2EE) capabilities. In the near term, E2EE will be extended to one-on-one calls using the Webex App and Webex devices, and to breakout rooms within meetings.

Looking further ahead, Webex aims to integrate Messaging Layer Security (MLS) support for all meeting types. This will enable End-to-End Identity verification for all meetings and introduce dynamic E2EE capabilities, allowing for seamless encryption adjustments during meetings – to counter a threat that’s equally dynamic.

It’s a multi-layered security approach that includes Zero Trust principles, encryption, and anti-deepfake measures, all working together to provide a robust shield against online meeting fraud.

As AI-driven phishing and deepfakes become increasingly sophisticated threats to online communication, the security of platforms like Cisco Webex becomes critical. It is encouraging to see that Cisco's multi-layered approach demonstrates a commitment to safeguarding online interactions.